Google’s I/O 2024 keynote was almost entirely filled with AI announcements detailing where and how its Gemini chatbot will be used across much of the company’s suite of products. The mid-year show is traditionally where you see a lot of software updates and product presentations, but the intense focus on how AI will improve Gmail, Search and other products consumers use daily is a warning shot for competitors.

That includes Apple, which is scheduled to hold its own software-focused WWDC keynote on June 10. The company behind the iPhone has kept its AI plans close to the vest, with CEO Tim Cook making only vague references during earnings calls to what it has in store for the year. Aside from the surprise release of its OpenELM language model last month, we know very little about what will happen at WWDC 2024.

But Google’s AI showcase gives Apple plenty of competition. The tech giant focused heavily on how its Gemini chatbot would integrate with and enhance existing services in a way that leverages its software strengths, including using AI to analyze Gmail and photos.

Here are all the areas where Google has the most advantages and where Apple will struggle to keep up.

Google leads: will Apple follow?

This Google I/O was a transition. Google has apparently replaced Google Assistant with Gemini – and in the future, with the not-yet-public Project Astra, a new AI initiative capable of scanning what your phone’s camera sees and offering advice (in one example shown during I/O, reminding someone where they left their glasses, which the phone had seen half a minute earlier).

It’s easy to see Apple supercharging Siri with AI to be more helpful, or even making the virtual assistant more proactive in noticing surroundings and offering suggestions. But Apple has also put user privacy at the forefront of its design decisions, so it’s hard to imagine the company following Google’s path toward more personalization at the cost of exposing users’ habits and environments.

Similarly, another feature offered by Google allows Gemini to listen to phone calls and notify users when it detects likely fraudulent activity. For example, a user would be alerted when a caller asks them to transfer money. Even if this detection happens on-device, as Google claims, it seems like a bridge too far for Apple to so intrusively monitor the behavior of iPhone owners – especially after the company gave up its CSAM detection proposal years ago following backlash from privacy advocates.

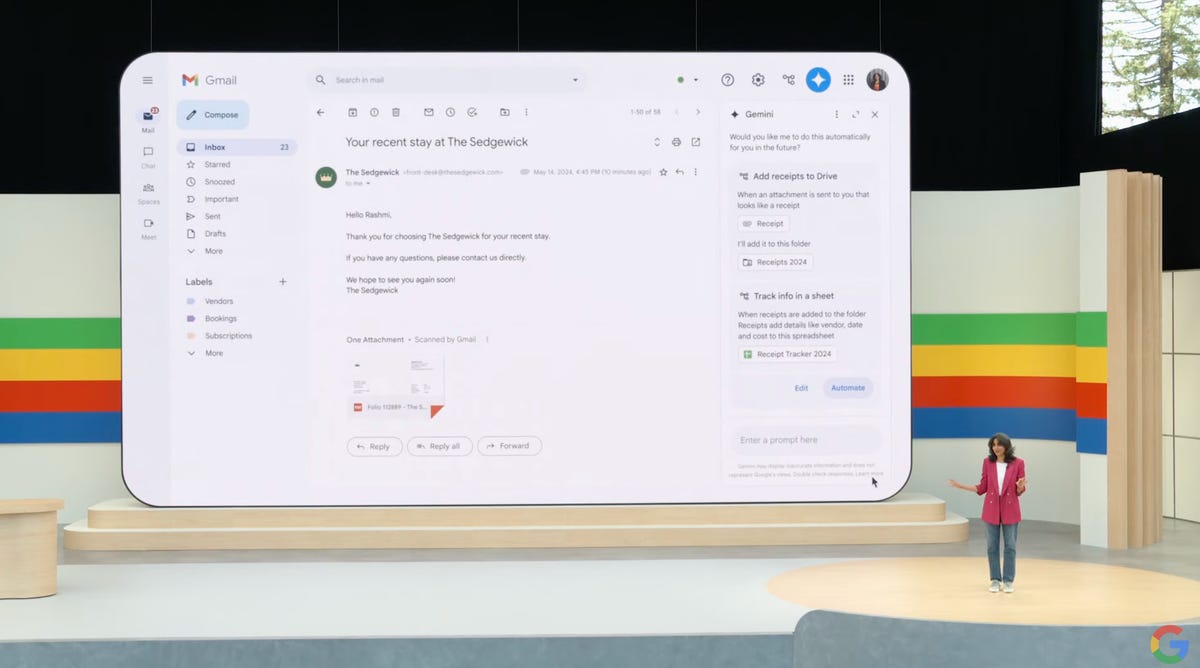

One of Google’s greatest strengths is using AI tools directly in its software suite. People can ask Gemini to analyze documents and photos. It can also sort your Gmail and handle busywork. Google showed an example of someone using it to gather receipts found in emails, put them into a folder, and create a spreadsheet. Gemini will also suggest a trio of canned replies tailored to each email for quick responses.

Sorting emails doesn’t seem too difficult for Apple to imitate through its Mail app, and having iOS or macOS search the operating system for more intelligent, context-aware results wouldn’t be too complicated either. But more complex queries, like the one shown at Google I/O that surfaced photos and videos of a child’s growing ability to swim, seem to require Apple to have a generative AI chatbot like Gemini, or something like ChatGPT, which OpenAI is in talks with Apple to bring to iPhones, Bloomberg reported.

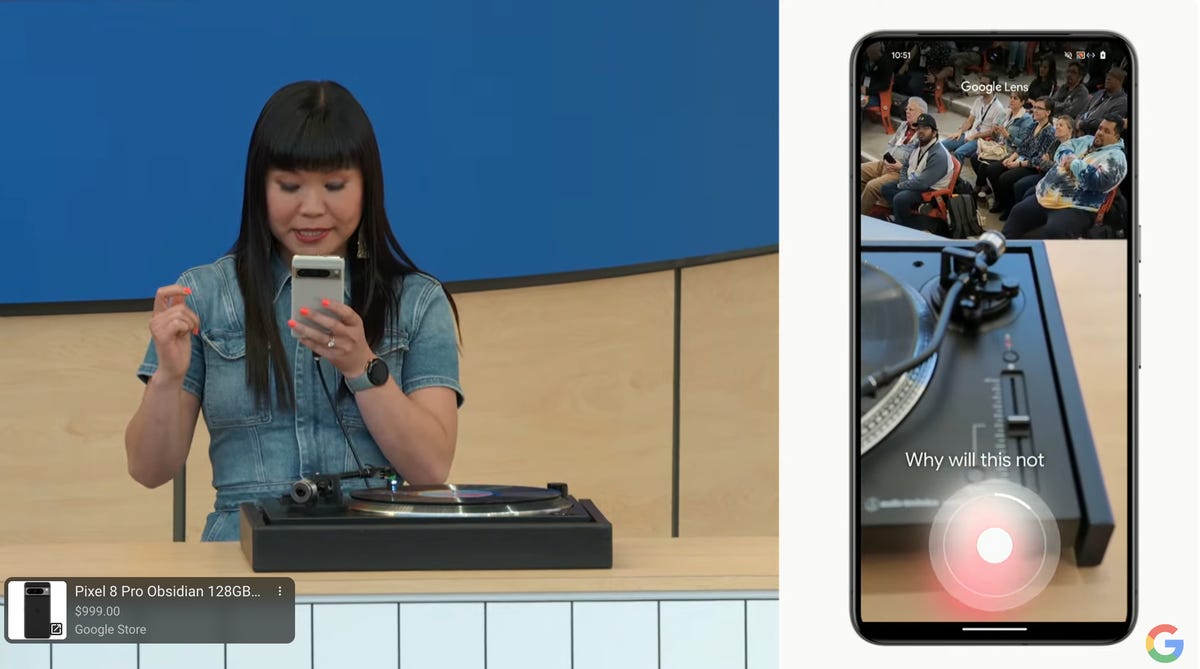

Google also had a lot to introduce for search, including the AI Overview pane that delivers processed results for whatever you searched for. On mobile, Google Lens has a new feature that lets you ask questions by voice while recording a video with the app. On the Google I/O stage, VP of Product Rose Yao asked why “this isn’t working” while pointing to a malfunctioning part of a record player, which the app identified before offering search results with a list of suggested fixes assembled by the AI.

Google Vice President Rose Yao demonstrates new capabilities in Google Lens.

Apple hasn’t yet brought AI to Safari, much less integrated the smart camera’s object identification into search results. The same goes for Google’s other mobile feature that debuted in the Samsung Galaxy S24, Circle to Search, which searches for anything on your phone’s screen that you draw a circle around using your finger or stylus. iPhone owners can crudely access this feature with a lengthy workaround, but it’s nowhere near as seamless as on Android phones.

Google also showed off a generative AI application for accessibility, an area where Apple has held the advantage for some time. Gemini Nano, the miniature version of the chatbot for phones, will enhance the existing TalkBack screen reader to vocalize what’s on the screen, edging in on Apple’s accessibility dominance. Gemini’s multimodality will essentially add descriptions to images on the fly, in the vein of alt text.

Then, of course, there’s the upcoming Android 15 update. Gemini is even more integrated into the operating system, with a feature called Gems, which are miniature versions of the chatbot dedicated to specific tasks. You can create Gems, for example, to generate (and encourage) new workout routines each day, or to guide you in preparing complex meals. Android 15 also uses the chatbot to power Live, a feature enabling live voice chats with the AI to ask questions and get natural responses. That is a lot of features that Apple may not be able to imitate in iOS 18.

Where Apple might be furthest behind, if it competes directly, is in Google’s additional ways of using generative AI to create audio and video, as well as to improve its image generation. Apple prides itself on making creativity easier, so it would be interesting to know if the company plans to integrate generative AI into software like Photos, Final Cut, and iMovie.

Google presenters said the phrase “AI” more than 120 times on stage at Google I/O 2024. They said nothing about hardware.

Is Apple playing to its strengths?

Although AI took up the entire Google I/O keynote, there were still many dark corners of the company where AI’s light did not reach. Past I/O software mainstays like Maps and Google Suite were nowhere to be found, and there was no mention of upcoming Pixel hardware. Aside from the already announced Pixel 8A, which Google appears to have unveiled last week to clear the decks for AI, we haven’t heard anything about new flagship Pixel phones, another Pixel Fold, or a follow-up to the Pixel Watch 2.

This leaves some room for Apple to make a big presentation at WWDC. We can expect the company to follow up with obvious AI integrations – in Siri, in iOS 18 and other OS updates, and perhaps in help with navigation or searching for documents.

Additionally, Google I/O didn’t have a single standout AI feature among its many incremental improvements and additions, leaving the door open for Apple to make headlines if it has something truly exceptional waiting in the wings.

What that could be is hard to imagine. Apple is late to the AI race and needs to introduce new features that convince users its products remain distinct from those of its competitors.

Editor’s note: CNET used an AI engine to create several dozen stories, which are labeled accordingly. The note you are reading is attached to articles that cover the topic of AI in depth but are created entirely by our expert editors and writers. To learn more, see our AI policy.