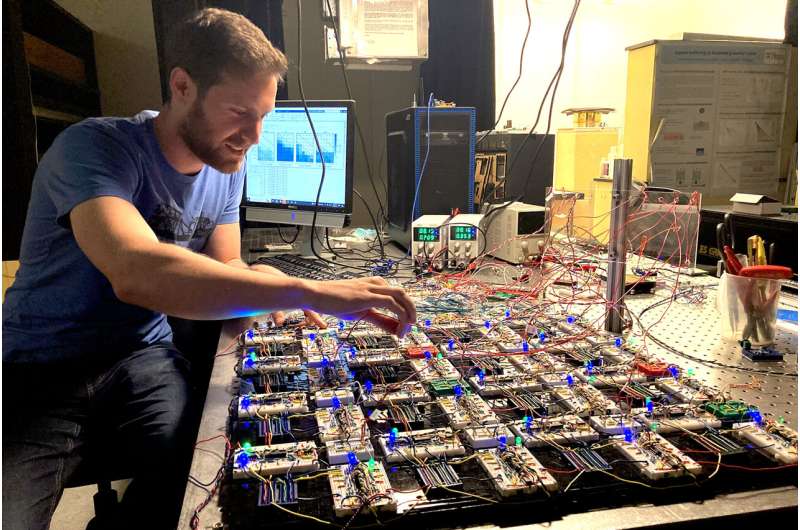

Sam Dillavou, a postdoctoral fellow in the Durian Research Group in the School of Arts and Sciences, built the components of this contrastive local learning network, a fast, low-power, scalable analog system that can learn nonlinear tasks. Credit: Erica Moser

Scientists face many tradeoffs when trying to build and scale up brain-like systems that can perform machine learning operations. For example, artificial neural networks are capable of learning complex language and vision tasks, but the process of teaching computers to perform these tasks is slow and requires a lot of power.

Training machines digitally but having them perform tasks in analog (meaning the input varies with a physical quantity, such as voltage) can reduce time and energy consumption, but small errors can quickly compound.

A previous electrical network designed by physics and engineering researchers at the University of Pennsylvania is more scalable because errors do not compound in the same way as the system grows. It is severely limited, however, because it can learn only linear tasks, that is, those with a simple relationship between input and output.

The researchers have now created an analog system that is fast, energy-efficient, scalable, and capable of learning more complex tasks, including exclusive-or (XOR) relationships and nonlinear regression. This is called a contrastive local learning network; the components evolve on their own based on local rules without knowledge of the larger structure.
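To see why XOR is a meaningful benchmark for a system that was previously limited to linear tasks, here is a minimal Python sketch. It is not taken from the paper: it fits an ordinary least-squares linear model to the XOR truth table, then repeats the fit with one extra nonlinear feature. The product feature `x1 * x2` is an illustrative assumption, not the form of nonlinearity the hardware uses.

```python
import numpy as np

# XOR truth table: output is 1 only when exactly one input is 1.
X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
y = np.array([0, 1, 1, 0], dtype=float)

# Best linear fit: y ~ a*x1 + b*x2 + c.
A_linear = np.column_stack([X, np.ones(len(X))])
coef_linear, *_ = np.linalg.lstsq(A_linear, y, rcond=None)
pred_linear = A_linear @ coef_linear

# Same fit with one nonlinear feature (x1*x2) added; an illustrative choice.
A_nonlinear = np.column_stack([X, X[:, 0] * X[:, 1], np.ones(len(X))])
coef_nonlinear, *_ = np.linalg.lstsq(A_nonlinear, y, rcond=None)
pred_nonlinear = A_nonlinear @ coef_nonlinear

print("linear predictions:   ", np.round(pred_linear, 3))
print("nonlinear predictions:", np.round(pred_nonlinear, 3))
```

The linear model can do no better than predicting the same value for every input, while the model with a single nonlinear term reproduces the table exactly.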

Physics professor Douglas J. Durian compares this phenomenon to how neurons in the human brain don’t know what other neurons are doing and yet learning emerges.

“It can learn, in a machine learning sense, to perform useful tasks, similar to a computer neural network, but it’s a physical object,” says physicist Sam Dillavou, a postdoctoral fellow in the Durian Research Group and first author of a paper on the system published in Proceedings of the National Academy of Sciences.

“One of the things that we’re really excited about is that because it has no knowledge of the structure of the network, it’s very error-tolerant, it’s very robust, and it can be fabricated in a variety of ways, and we think that opens up a lot of possibilities for evolving these things,” says engineering professor Marc Z. Miskin.

“I think it’s an ideal model system that we can study to better understand all sorts of problems, including biological ones,” says Andrea J. Liu, professor of physics. She adds that it could also be useful for interfacing with devices that collect data that need processing, such as cameras and microphones.

In the paper, the authors claim that their self-learning system “offers a unique opportunity to study emergent learning. Compared with biological systems, including the brain, our system relies on simpler and well-understood dynamics, is precisely trainable, and uses simple modular components.”

This research builds on the coupled learning framework that Liu and postdoctoral fellow Menachem (Nachi) Stern designed, publishing their results in 2021. In this paradigm, a physical system that is not designed to perform a certain task adapts to applied inputs to learn the task, while using local learning rules and no centralized processor.

Dillavou says he came to Penn specifically for this project, and he worked on translating the simulation framework to its current physical design, which can be realized using standard circuit components.

“One of the craziest things about this system is that it learns by itself; we just set it up to work,” Dillavou says. The researchers only feed in voltages as the input, and the transistors that connect the nodes then update their properties based on the coupled learning rule.
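The idea of a local rule can be sketched in simulation. The Python example below is a simplified illustration under stated assumptions, not the authors' circuit: it models a small network of ideal linear resistors rather than transistors, and it uses the commonly stated form of the coupled learning rule, in which each edge compares its own voltage drop in a "free" state (inputs applied) with a "clamped" state (output nudged slightly toward the desired value). The node layout, the target function, and the values of `alpha`, `eta`, and `steps` are all hypothetical.

```python
import numpy as np

# Hypothetical 5-node network: two inputs, ground, one output, one hidden node.
IN_A, IN_B, GROUND, OUT, HIDDEN = range(5)
N_NODES = 5
# Every pair of nodes is joined by one adjustable conductance (an "edge").
EDGES = [(i, j) for i in range(N_NODES) for j in range(i + 1, N_NODES)]
I_IDX = np.array([i for i, _ in EDGES])
J_IDX = np.array([j for _, j in EDGES])


def solve(k, fixed):
    """Return all node voltages for conductances k and clamped nodes `fixed`."""
    lap = np.zeros((N_NODES, N_NODES))
    for (i, j), ke in zip(EDGES, k):
        lap[i, i] += ke
        lap[j, j] += ke
        lap[i, j] -= ke
        lap[j, i] -= ke
    clamped = list(fixed)
    free = [n for n in range(N_NODES) if n not in fixed]
    v = np.zeros(N_NODES)
    v[clamped] = [fixed[n] for n in clamped]
    # Kirchhoff's current law at the unclamped nodes.
    v[free] = np.linalg.solve(lap[np.ix_(free, free)],
                              -lap[np.ix_(free, clamped)] @ v[clamped])
    return v


def target(a, b):
    """Illustrative linear task the network should learn (an assumption)."""
    return 0.1 * a + 0.5 * b


def mse(k):
    """Mean squared output error over a fixed grid of input voltages."""
    grid = np.linspace(0.0, 1.0, 5)
    errs = [(solve(k, {IN_A: a, IN_B: b, GROUND: 0.0})[OUT] - target(a, b)) ** 2
            for a in grid for b in grid]
    return float(np.mean(errs))


def train(k, steps=3000, alpha=0.2, eta=0.1, seed=0):
    """Apply the local update to every edge for `steps` random examples."""
    rng = np.random.default_rng(seed)
    k = k.copy()
    for _ in range(steps):
        a, b = rng.uniform(0.0, 1.0, size=2)
        inputs = {IN_A: a, IN_B: b, GROUND: 0.0}
        v_free = solve(k, inputs)  # free state: only inputs applied
        nudged = v_free[OUT] + eta * (target(a, b) - v_free[OUT])
        v_clamped = solve(k, {**inputs, OUT: nudged})  # output nudged to target
        d_free = v_free[I_IDX] - v_free[J_IDX]
        d_clamped = v_clamped[I_IDX] - v_clamped[J_IDX]
        # Each edge uses only its own two voltage drops: no global information.
        k += (alpha / (2.0 * eta)) * (d_free ** 2 - d_clamped ** 2)
        k = np.clip(k, 1e-3, None)  # keep conductances positive
    return k


k_initial = np.random.default_rng(1).uniform(0.5, 1.5, size=len(EDGES))
initial_error = mse(k_initial)
k_trained = train(k_initial)
final_error = mse(k_trained)
print(f"error before training: {initial_error:.2e}, after: {final_error:.2e}")
```

No step in the update refers to the network's overall layout or to a global error signal; each edge adjusts itself from the two voltage drops it experiences. Because this sketch uses linear resistors, it can learn only linear tasks, which is the limitation the new transistor-based design is reported to overcome.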

“Because the way it computes and learns is based on physics, it’s much more interpretable,” Miskin says. “You can actually understand what it’s trying to do because you have a good idea of the underlying mechanism. That’s pretty unique because a lot of other learning systems are black boxes where it’s much harder to know why the network did what it did.”

Durian says he hopes this “is the beginning of a huge field,” noting that another postdoc in his lab, Lauren Altman, is building mechanical versions of contrastive local learning networks.

The researchers are currently working on improving the design, and Liu says there are many questions about how long the memory will last, the effects of noise, the best architecture for the network, and whether there are better forms of nonlinearity.

“It’s not really clear what changes as we develop a learning system,” Miskin says. “If you think about a brain, there’s a huge gap between an earthworm with 300 neurons and a human being, and it’s not clear where those capabilities come from, or how things evolve as you grow. Having a physical system that you can make bigger and bigger and bigger is an opportunity to really study that.”

More information:

Sam Dillavou et al, Processorless machine learning: Emergent learning in a nonlinear analog network, Proceedings of the National Academy of Sciences (2024). DOI: 10.1073/pnas.2319718121

Provided by the University of Pennsylvania

Citation: A first physical system to learn nonlinear tasks without a traditional computer processor (2024, July 8) retrieved July 8, 2024 from https://techxplore.com/news/2024-07-physical-nonlinear-tasks-traditional-processor.html
