Keeping pace with a rapidly evolving industry like AI is a daunting challenge. Until an AI can do it for you, here’s a handy roundup of recent stories in the world of machine learning, as well as notable research and experiments that we haven’t covered on our own.

By the way, TechCrunch plans to launch an AI newsletter soon. Stay tuned. In the meantime, we’re increasing the cadence of our semi-regular AI column from twice a month (or so) to weekly – so be on the lookout for more editions.

This week in AI, OpenAI once again dominated the news cycle (despite Google's best efforts) with a product launch, but also with some palace intrigue. The company unveiled GPT-4o, its most capable generative model yet. Days later, it effectively disbanded a team working on the problem of developing controls to prevent "superintelligent" AI systems from going rogue.

The team's dismantling made headlines, as one might expect. Reports, including our own, suggest that OpenAI deprioritized the team's safety research in favor of launching new products like the aforementioned GPT-4o. That ultimately led to the resignation of the team's two co-leads, Jan Leike and OpenAI co-founder Ilya Sutskever.

Superintelligent AI is more theoretical than real at this point; it's unclear when – or if – the tech industry will achieve the breakthroughs needed to create AI capable of performing any task a human can. But this week's coverage seems to confirm one thing: OpenAI's leadership, particularly CEO Sam Altman, has increasingly chosen to prioritize products over safeguards.

Altman reportedly "infuriated" Sutskever by rushing the launch of AI-powered features at OpenAI's first developer conference last November. He also reportedly criticized Helen Toner, director at Georgetown's Center for Security and Emerging Technology and a former OpenAI board member, over a paper she co-authored that cast OpenAI's approach to safety in a critical light. He went so far as to try to push her off the board.

Over the past year, OpenAI has let its chatbot store fill up with spam and (allegedly) scraped data from YouTube against the platform's terms of service. It has also voiced ambitions to let its AI generate depictions of porn and gore. Safety certainly seems to have taken a back seat at the company, and a growing number of OpenAI safety researchers have concluded that their work would be better supported elsewhere.

Here are some other interesting AI stories from recent days:

- OpenAI + Reddit: In more OpenAI news, the company struck a deal with Reddit to use the social site's data for AI model training. Wall Street welcomed the deal with open arms, but Reddit users may not be so pleased.

- Google’s AI: Google held its annual I/O Developer Conference this week, where it debuted a ton of AI products. We’ve rounded them up here, from the Veo video generator to AI-curated results in Google Search to upgrades to Google’s Gemini chatbot apps.

- Anthropic hires Krieger: Mike Krieger, one of Instagram's co-founders and, more recently, co-founder of the personalized news app Artifact (which TechCrunch parent company Yahoo recently acquired), is joining Anthropic as the company's first chief product officer. He'll oversee both the company's consumer and enterprise efforts.

- AI for kids: Anthropic announced last week that it would begin allowing developers to build kid-focused apps and tools on its AI models, as long as they follow certain rules. Notably, rivals like Google don't allow their AI to be built into apps aimed at younger users.

- At the film festival: AI startup Runway held its second AI film festival earlier this month. The takeaway? Some of the more powerful moments in the showcase came not from the AI, but from the more human elements.

More machine learning

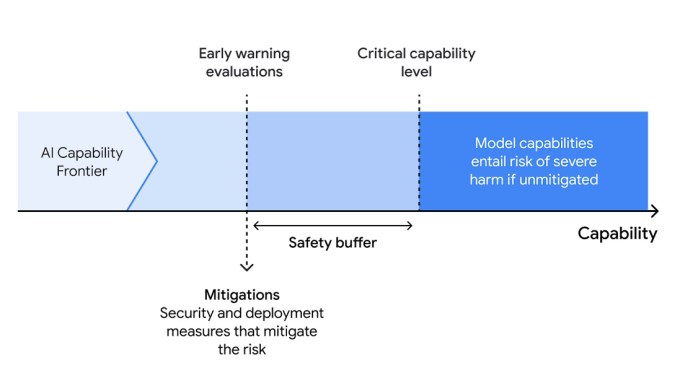

AI safety is obviously top of mind this week with the OpenAI departures, but Google DeepMind is pressing ahead with a new "Frontier Safety Framework." Basically, it's the organization's strategy for identifying and, hopefully, preventing runaway capabilities. It doesn't have to be AGI; it could be a malware generator gone haywire or something similar.

The framework has three steps: 1. Identify potentially harmful capabilities in a model by simulating its paths of development. 2. Evaluate models regularly to detect when they have reached known "critical capability levels." 3. Apply a mitigation plan to prevent exfiltration (by another party or by the model itself) or problematic deployment. There are more details here. It may sound like a series of obvious actions, but it's important to formalize them, or else everyone is just winging it. That's how you get bad AI.

A rather different risk has been identified by researchers at Cambridge, who are rightly concerned about the proliferation of chatbots trained on a deceased person's data to provide a superficial simulacrum of that person. You may (like me) find the whole concept somewhat abhorrent, but it could be used in grief management and other scenarios if we are careful. The problem is that we are not being careful.

"This area of AI is an ethical minefield," said lead researcher Katarzyna Nowaczyk-Basińska. "We need to start thinking now about how we mitigate the social and psychological risks of digital immortality, because the technology is already here." The team identifies numerous scenarios and their potential bad and good outcomes, and discusses the concept generally (including fake services) in a paper published in Philosophy & Technology. Black Mirror predicts the future once again!

In less creepy applications of AI, MIT physicists are looking at a useful (to them) tool for predicting a physical system's phase or state. That's normally a statistical task that can grow onerous as systems become more complex. But train a machine learning model on the right data and ground it in some known physical characteristics of the system, and you have a considerably more efficient way to do it. It's just another example of how ML is finding niches even in advanced science.

Over at CU Boulder, researchers are exploring how AI can be used in disaster management. The technology can be useful for quickly predicting where resources will be needed, mapping damage, and even helping to train responders, but people are (understandably) hesitant to apply it in life-or-death scenarios.

Professor Amir Behzadan is trying to move the needle in this area, arguing that “human-centered AI leads to more effective disaster response and recovery practices by fostering collaboration, understanding and inclusiveness among team members, survivors and stakeholders.” They’re still in the workshop phase, but it’s important to think deeply about this before trying, for example, to automate aid distribution after a hurricane.

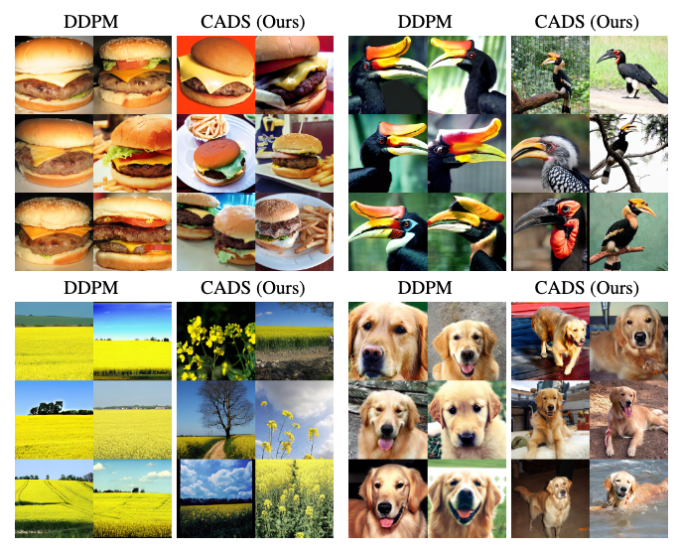

Finally, some interesting work from Disney Research, which sought to diversify the output of diffusion image generation models, which can produce very similar results over and over for certain prompts. Their solution? "Our sampling strategy anneals the conditioning signal by adding scheduled, monotonically decreasing Gaussian noise to the conditioning vector during inference to balance diversity and condition alignment." I simply couldn't put it better myself.

The result is a much greater diversity of angles, settings and overall appearance in image output. Sometimes you want it, sometimes you don’t, but it’s nice to have the option.
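For the curious, here's roughly what "annealing the conditioning signal" can look like, as a minimal Python sketch. It is not Disney Research's code. The piecewise-linear schedule `gamma`, the thresholds `tau1` and `tau2`, and the `noise_scale` value are all illustrative assumptions, and the conditioning vector is a stand-in for a real prompt embedding. The idea: early in sampling (t near 1) the prompt's conditioning vector is mostly replaced by noise, which leaves room for varied compositions. The noise then decays to nothing as t approaches 0, so the finished image still matches the prompt.

```python
from typing import Optional

import numpy as np


def gamma(t: float, tau1: float = 0.6, tau2: float = 0.9) -> float:
    """Hypothetical piecewise-linear schedule (assumed values, not Disney's).

    t runs from 1.0 (start of sampling, pure noise) down to 0.0 (final image).
    Returns how much of the clean conditioning vector to keep: 0.0 while
    t >= tau2, 1.0 once t <= tau1, and a linear ramp in between.
    """
    if t <= tau1:
        return 1.0
    if t >= tau2:
        return 0.0
    return (tau2 - t) / (tau2 - tau1)


def anneal_condition(
    y: np.ndarray,
    t: float,
    noise_scale: float = 0.25,  # hypothetical strength of the injected noise
    rng: Optional[np.random.Generator] = None,
) -> np.ndarray:
    """Blend Gaussian noise into conditioning vector y at timestep t."""
    rng = rng if rng is not None else np.random.default_rng()
    g = gamma(t)
    noise = rng.standard_normal(y.shape)
    # The noise weight sqrt(1 - g) never grows as t moves from 1.0 toward 0.0,
    # and the clean signal weight sqrt(g) never shrinks.
    return np.sqrt(g) * y + noise_scale * np.sqrt(1.0 - g) * noise


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    y = np.ones(8)  # stand-in for a prompt embedding
    for t in np.linspace(1.0, 0.0, 6):
        y_hat = anneal_condition(y, float(t), rng=rng)
        dist = np.linalg.norm(y_hat - y)
        print(f"t={t:.1f}  gamma={gamma(float(t)):.2f}  distance from y={dist:.3f}")
```

In a real sampler, something like `anneal_condition` would presumably be called once per denoising step, just before the conditioning vector is handed to the model; the printout above simply shows the conditioning drifting back toward the clean prompt embedding as sampling proceeds.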